Many DJs are now using synthesizers as MIDI controllers to shape sounds and drive external gear during live performances.

But can synthesizers effectively take the place of traditional MIDI control surfaces?

Let’s dive in and explore the capabilities and limitations of using your synth as a central MIDI hub.

Yes, Synthesizers Can Be Used As MIDI Controllers

The short answer is yes – synthesizers are absolutely capable of serving as MIDI controller devices.

When connected through standard MIDI ports, a synthesizer can transmit MIDI data such as notes, continuous controller messages and program changes to external sound modules and software instruments.

This allows real-time manipulation of MIDI-driven sounds using the synth's own keys, knobs and wheels.

We’ll explore the how and why in more detail below.

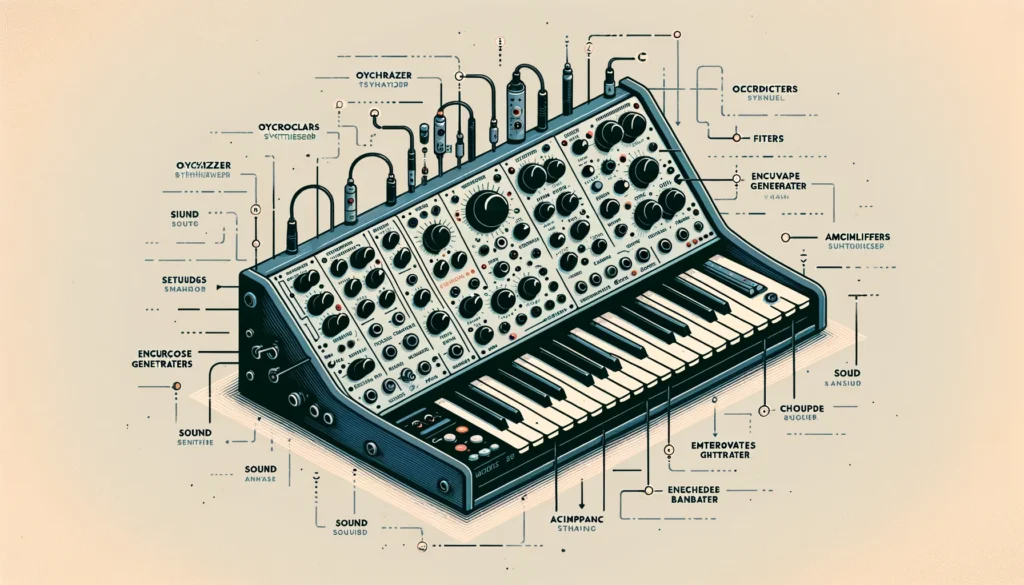

What is a Synthesizer?

A synthesizer is an electronic musical instrument that is capable of generating a wide range of sounds using various sound generation and signal processing techniques.

The unique characteristic of a synthesizer compared to other musical instruments is that it does not rely on the physics of resonant vibrating strings, air columns, or membranes to produce tones.

Instead, a synthesizer utilizes components like oscillators, filters, envelope generators and amplifiers powered by electricity to generate and mold sounds.

The most fundamental part of a synthesizer is the oscillator section.

An oscillator generates a repetitive electronic waveform at an adjustable frequency, which determines the pitch of the sound.

Some of the common waveform shapes include sine, sawtooth, triangle and square waves.
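
As a rough illustration of the waveform shapes just listed, here is a minimal Python sketch of a naive oscillator. The function name `oscillator` and its parameters are hypothetical, and real synthesizers typically use band-limited or analog implementations rather than this textbook math.

```python
import math

def oscillator(shape: str, freq_hz: float, t: float) -> float:
    """Return one sample in [-1, 1] of a naive waveform at time t (seconds)."""
    # Position within the current cycle, from 0.0 up to (but not including) 1.0.
    phase = (freq_hz * t) % 1.0
    if shape == "sine":
        return math.sin(2 * math.pi * phase)
    if shape == "sawtooth":
        # Ramps linearly from -1 to +1 over one cycle.
        return 2.0 * phase - 1.0
    if shape == "square":
        # High for the first half of the cycle, low for the second half.
        return 1.0 if phase < 0.5 else -1.0
    if shape == "triangle":
        # Rises from -1 to +1 in the first half, falls back in the second.
        return 1.0 - 4.0 * abs(phase - 0.5)
    raise ValueError(f"unknown shape: {shape}")
```

Raising `freq_hz` shortens each cycle, which is what raises the perceived pitch.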

The oscillator waveforms pass through additional sound sculpting devices like filters, amplifiers and envelope generators which shape the harmonic content and amplitude profile over time.

Filters remove certain frequencies from a sound based on a cutoff setting.

A low-pass filter progressively attenuates frequencies above the cutoff while allowing lower frequencies to pass through.
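
To make the low-pass idea concrete, the following is a minimal sketch of a one-pole digital low-pass filter. This is only one simple filter design chosen for illustration, not what any particular synthesizer uses; the function name and the 44,100 Hz default sample rate are assumptions.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate=44_100):
    """Smooth a list of samples with a simple one-pole low-pass filter."""
    # Smoothing coefficient: closer to 1.0 means a higher cutoff (less filtering).
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    output = []
    y = 0.0  # the filter's running state (its previous output)
    for x in samples:
        # Move the output a fraction of the way toward the new input sample.
        y += alpha * (x - y)
        output.append(y)
    return output
```

A steady input passes through almost unchanged, while a rapidly alternating input is strongly reduced, which is the audible "darkening" heard when a cutoff knob is turned down.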

Envelope generators modulate sound parameters using four stages – attack, decay, sustain and release (ADSR).

This imparts an amplitude and tonal evolution giving the otherwise static electronic waveforms more dynamic expression.
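
The four ADSR stages can be sketched as a function of time. This is a simplified linear envelope under assumed default timings; the names `held_level` and `adsr_level` are hypothetical, and hardware envelopes often use curved rather than straight segments.

```python
def held_level(t, attack, decay, sustain):
    """Envelope level while the key is held, t seconds after note-on."""
    if t < attack:
        return t / attack  # attack: rise from 0 to full level
    if t < attack + decay:
        # decay: fall from full level down to the sustain level
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    return sustain  # sustain: hold until the key is released

def adsr_level(t, note_off_t, attack=0.01, decay=0.1, sustain=0.7, release=0.3):
    """Envelope level (0 to 1) at time t for a note released at note_off_t."""
    if t < 0:
        return 0.0
    if t < note_off_t:
        return held_level(t, attack, decay, sustain)
    # release: fade linearly to silence from wherever the envelope was
    start = held_level(note_off_t, attack, decay, sustain)
    elapsed = t - note_off_t
    if elapsed >= release:
        return 0.0
    return start * (1.0 - elapsed / release)
```

Multiplying each oscillator sample by this level gives a note its volume contour; routing the same value to a filter cutoff gives it a tonal contour instead.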

The shaped sound finally reaches voltage controlled amplifiers which determine the output volume of the generated sound.

Synthesizers may implement the sound generation and manipulation circuits using analog, digital or software techniques.

Analog synthesizers use real electronic circuits consisting of physical components like resistors, capacitors, transistors and integrated chips.

They generate warmer, organic sounds rich in harmonics but can drift out of tune over time.

Digital synthesizers use microprocessors, digital signal processing (DSP) chips and software algorithms to generate and process sound.

They offer more precision and recallability but may suffer from an overly ‘clinical’ sound.

Hybrid synthesizers provide the ‘best of both worlds’ by combining analog sound generation with digital control and stability.

Finally, software synthesizers run entirely ‘in-the-box’ within music production environments on a computer.

They provide ease of use through point-and-click graphical interfaces.

What is MIDI?

MIDI stands for Musical Instrument Digital Interface.

It is an industry standard communication protocol that enables electronic musical instruments and digital devices to interact with each other.

The MIDI specification was published in 1983 and allows interconnected electronic components to synchronize with each other and exchange performance data.

This facilitates an integrated music production and performance ecosystem using MIDI-capable instruments from different manufacturers.

Unlike analog audio signals, a MIDI connection does not actually transmit sounds directly in the form of electronic waveforms.

Instead, MIDI sends coded digital data messages that relay musical performance instructions.

Examples include note events, controller changes, clock signals and other event data, all sent as standardized numeric messages.

Decoded at the receiving end, this performance information triggers notes, modifies sound parameters and keeps devices synchronized with precise timing.

Some typical MIDI messages include Note On/Note Off messages, which start and stop individual notes; Control Change messages, which manipulate sound parameters like volume or panning; Program Change messages, which select sound presets; and Clock messages, which keep devices in tempo.

The numeric Control Change messages correspond to control components on MIDI instruments like knobs, sliders or wheels.

The MIDI specification supports 128 note numbers, 127 non-zero velocity levels, 128 controller numbers and 16 channels of data, enabling nuanced articulation and layers of complexity in performance data transmission.
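
The message types and value ranges described above can be made concrete by building the raw bytes by hand. The sketch below follows the MIDI 1.0 channel message layout (a status byte carrying the message type and channel, followed by 7-bit data bytes); the helper names are hypothetical and channels are zero-based here, so 0 corresponds to what most gear displays as channel 1.

```python
def _data_byte(value: int, name: str) -> int:
    """Validate a 7-bit MIDI data byte (0 to 127)."""
    if not 0 <= value <= 127:
        raise ValueError(f"{name} must be 0..127, got {value}")
    return value

def _status(kind: int, channel: int) -> int:
    """Combine a message type (upper 4 bits) with a zero-based channel (0 to 15)."""
    if not 0 <= channel <= 15:
        raise ValueError(f"channel must be 0..15, got {channel}")
    return kind | channel

def note_on(channel: int, note: int, velocity: int) -> bytes:
    return bytes([_status(0x90, channel), _data_byte(note, "note"),
                  _data_byte(velocity, "velocity")])

def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    return bytes([_status(0x80, channel), _data_byte(note, "note"),
                  _data_byte(velocity, "velocity")])

def control_change(channel: int, controller: int, value: int) -> bytes:
    return bytes([_status(0xB0, channel), _data_byte(controller, "controller"),
                  _data_byte(value, "value")])

def program_change(channel: int, program: int) -> bytes:
    # Program Change carries only one data byte.
    return bytes([_status(0xC0, channel), _data_byte(program, "program")])

# Timing Clock is a single-byte system message, sent 24 times per quarter note.
TIMING_CLOCK = bytes([0xF8])
```

For example, `note_on(0, 60, 100)` yields the three bytes `0x90 0x3C 0x64`, which a receiver interprets as middle C on the first channel at a moderately strong velocity.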

MIDI connections on devices are enabled through specialized ports, cables and interfaces.

Classic MIDI ports use 5-pin DIN connectors, and a single cable carries all 16 MIDI channels in one direction.

Modern computers use USB ports and USB MIDI interfaces to connect instruments and route MIDI messages to digital audio workstation (DAW) software.

Wireless MIDI solutions, typically based on radio links such as Bluetooth, are also available for cable-free operation.

The versatile MIDI standard truly brought electronic musical systems together into a tightly integrated ecosystem.

Using a Synthesizer as a MIDI Controller

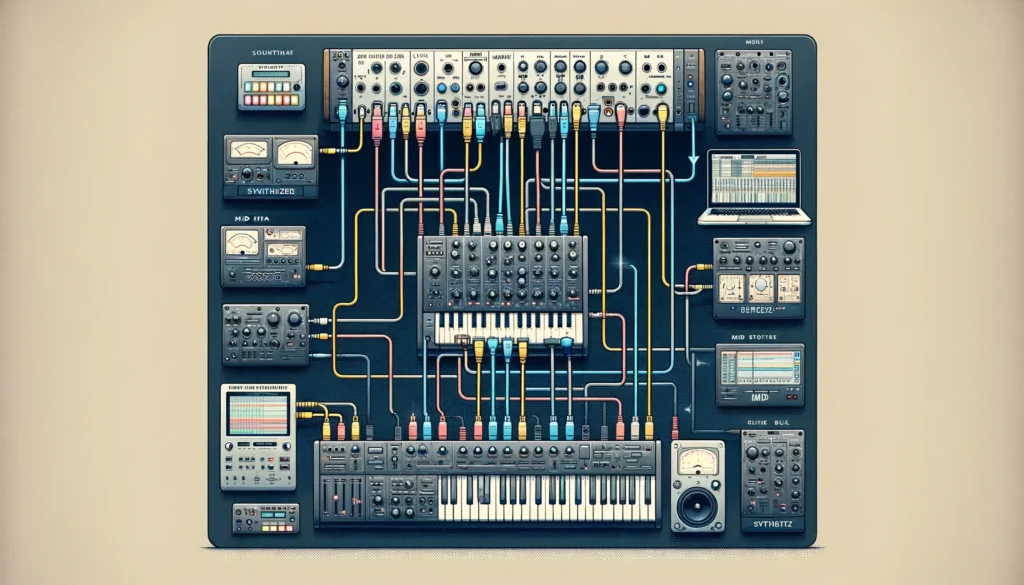

Connecting a Synth to a MIDI Interface

In order to leverage the communication and control capabilities offered by the MIDI protocol, the synthesizer first needs to be connected to a computer or another MIDI device using appropriate MIDI ports and cables.

Most synthesizers are equipped with standard MIDI In/Out ports on 5-pin DIN connectors into which a MIDI cable can be plugged.

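The other end of the cable goes into a MIDI interface connected to a computer: the synth's MIDI Out feeds the interface's MIDI In, and a second cable from the interface's MIDI Out to the synth's MIDI In is needed if the computer should send data back.

This interface converts the MIDI signals into a format recognizable by the computer, which is running Digital Audio Workstation (DAW) software or a sound-generating application.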

Alternatively, many synthesizers also offer a USB port which allows a direct connection through a USB cable into the computer.

Some synthesizers need a manufacturer driver installed on the computer before they are recognized properly.

A class-compliant USB MIDI device avoids driver issues and complex configuration because it works with the operating system's built-in driver.

Once connected successfully over traditional MIDI ports or USB, the synthesizer is able to exchange MIDI performance and control messages with the software instruments residing within the DAW or sound design application.

The synthesizer keyboard, knobs, ribbon controllers and other components can manipulate various sound parameters or trigger notes on the external MIDI devices.

Controlling External MIDI Devices

The MIDI messages transmitted by the synthesizer contain encoded performance instructions which can manipulate sounds being generated from an external hardware sound module or software plugin instrument.

For example, playing notes on the synthesizer keyboard sends MIDI Note On/Note Off messages to play corresponding pitched sounds on the target device.

Similarly, turning knobs which control filter cutoff or resonance on the synthesizer can transmit MIDI Control Change messages.

These can then modify the tone or harmonics of the sound being produced by the external MIDI tone generator in real time.
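
On the receiving side, the numbers in these messages have to be turned back into sound parameters. The sketch below shows two such conversions: the standard equal-temperament formula from note number to pitch (with A4, note 69, at 440 Hz), and an assumed exponential mapping from a Control Change value to a filter cutoff. The 20 Hz to 20 kHz range and the function names are illustrative choices; actual devices define their own curves.

```python
def note_to_frequency(note: int, a4_hz: float = 440.0) -> float:
    """Convert a MIDI note number to a pitch in Hz (12-tone equal temperament)."""
    # Each semitone multiplies the frequency by the twelfth root of two.
    return a4_hz * 2.0 ** ((note - 69) / 12)

def cc_to_cutoff_hz(value: int, low_hz: float = 20.0, high_hz: float = 20_000.0) -> float:
    """Map a 7-bit controller value (0 to 127) to a cutoff frequency.

    The exponential curve is an assumption made for this sketch so that
    equal knob movements feel like equal musical steps.
    """
    if not 0 <= value <= 127:
        raise ValueError(f"value must be 0..127, got {value}")
    return low_hz * (high_hz / low_hz) ** (value / 127)
```

With only 128 steps per controller, each step in this mapping changes the cutoff by roughly 5.6 percent, which is why fast filter sweeps over MIDI can sound slightly stepped unless the receiver smooths them.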

Using this communication mechanism, the synthesizer's controls shape the parameters of the target sound as it is being created, rather than manipulating a static sound reproduction.

This helps recreate the intimate, tactile experience of shaping an instrument's sound onstage during a live performance.

In the studio, it allows software synth parameters such as oscillators, LFOs and envelopes to be controlled directly from the master keyboard without reaching for the mouse.

This helps speed up sound design experimentation and encourages happy accidents through spontaneous tweaking!

Multiple external hardware sound modules can be daisy-chained, and software instruments layered alongside them, to form a stacked synthesizer setup.

The central controller synthesizer keyboard is then able to play different parts such as basslines, accompaniment and leads selectively across the various sound generator units.

This facilitates splitting or layering arrangements across multiple sounds for increased flexibility.
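
A split or layer of this kind comes down to choosing a MIDI channel per key range. Here is a minimal sketch, assuming a hypothetical zone table in which each sound module listens on its own channel; the split points and channel assignments are made up for illustration.

```python
# Each zone is (lowest note, highest note, zero-based MIDI channel).
# These split points are illustrative, not taken from any specific instrument.
ZONES = [
    (0, 47, 0),    # bass module on channel 1
    (48, 71, 1),   # accompaniment module on channel 2
    (72, 127, 2),  # lead module on channel 3
]

def route_note(note: int, velocity: int, zones=ZONES) -> list:
    """Return one Note On message for every zone that contains the note."""
    messages = []
    for low, high, channel in zones:
        if low <= note <= high:
            # 0x90 is the Note On status; the low 4 bits carry the channel.
            messages.append(bytes([0x90 | channel, note, velocity]))
    return messages
```

Non-overlapping zones produce a keyboard split, while overlapping zones produce a layer, since the same key then triggers more than one module.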

The MIDI synthesizer controller acts as the central hub from which the entire electronic orchestra gets its instructions!

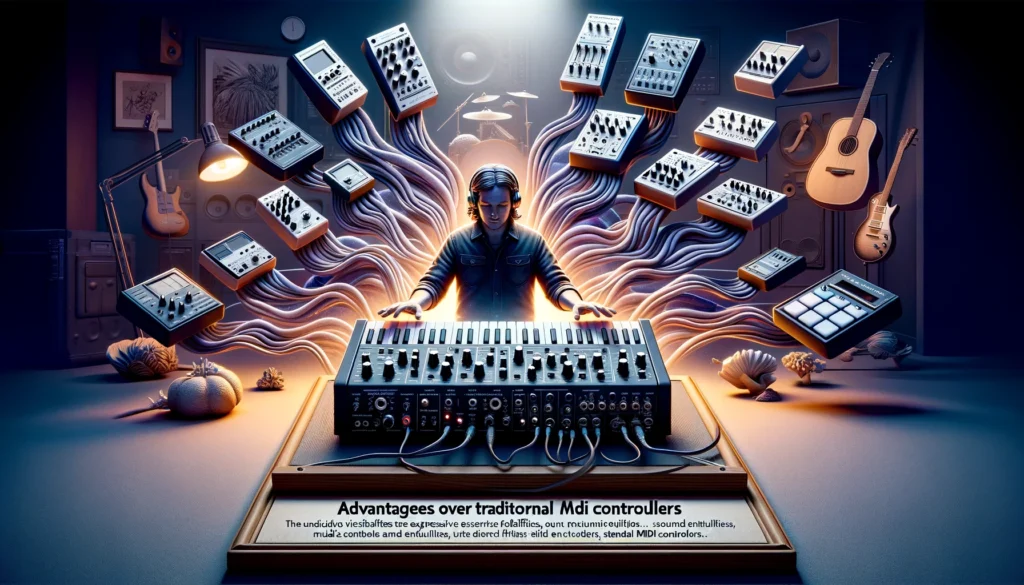

Advantages Over Traditional MIDI Controllers

While traditional MIDI keyboards and pad controllers offer great ways to trigger and manipulate MIDI equipped gear, synthesizers provide certain additional advantages thanks to their sound generation capabilities.

Since synthesizers are self-contained tone generation instruments in their own right, they provide players with the unique experience of directly hearing the sounds being sculpted while the performance controls are being tweaked in real-time.

For example, snappy velocity-sensitive keyboards, aftertouch implementation, realistic string ensemble textures and accurate ribbon controllers or mod wheels on synthesizers can provide a much more tactile and responsive playing experience than generic MIDI keyboards.

Performance gestures on the synthesizer immediately impact the produced sound which drives further inspiration and organic interplay.

This real-time sound reinforcement encourages experimentation and happy accidents which makes using synthesizers for MIDI control during music production or live playing scenarios extremely gratifying.

Another advantage stems from additional sound manipulation components like filters, envelopes, LFOs and sequencers, which are far more common on synthesizers than on plain controllers.

These powerful sound shaping tools provide deeper and more direct ways of morphing the target MIDI controlled sound compared to generic sliders and knobs on MIDI controllers.

For example, sweeping the synthesizer filter cutoff or resonance alters harmonics in expressive ways.

Sequencing arpeggiator and modulation patterns from the synthesizer further animates the MIDI sound.

These continuous controls encourage dynamic sound exploration compared to the static nature of buttons and pads on most MIDI gear.

Limitations to Consider

While synthesizers make amazingly versatile MIDI controllers, it helps to be aware of some limitations compared to traditional MIDI keyboards and controllers.

The most significant limitation is that the complexity of synthesizer interfaces does not always map intuitively to parameters on target MIDI devices.

For example, while the filter cutoff knob on the synth impacts its internal sound engine directly, this will not necessarily control a ‘filter cutoff’ on the MIDI device.

Complex routing matrices and synths with deep modular interfaces may need extensive MIDI mapping to channel controls to desired destinations.
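
When the controller number a synth knob transmits does not match what the target expects, a small translation step between the two can bridge the gap. The sketch below rewrites Control Change messages using a lookup table. The specific numbers are hypothetical: CC 74 is commonly used for brightness or filter cutoff, but both the source and the destination numbers depend entirely on the devices involved.

```python
# Hypothetical mapping: controller number sent by the synth -> number the target expects.
CC_MAP = {
    74: 21,  # synth's cutoff knob -> target's cutoff parameter (assumed)
    71: 22,  # synth's resonance knob -> target's resonance parameter (assumed)
}

def remap_cc(message: bytes, cc_map=CC_MAP) -> bytes:
    """Rewrite the controller number of a Control Change message.

    Any other message, or a controller not in the table, passes through unchanged.
    """
    is_control_change = len(message) == 3 and (message[0] & 0xF0) == 0xB0
    if is_control_change and message[1] in cc_map:
        # Keep the status byte (type + channel) and the value; swap the controller.
        return bytes([message[0], cc_map[message[1]], message[2]])
    return message
```

Many DAWs and hardware units offer a "MIDI learn" feature that builds an equivalent table automatically, which is usually the easier route when it is available.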

Onboard displays, MIDI mapping capabilities and interactive software editors can help mitigate this issue.

However, some trial and error is needed when controlling external MIDI gear from synthesizers, as advanced capabilities may not translate directly.

Simpler synthesizers and those implementing the classic ADSR envelope paradigm tend to have more success driving MIDI gear consistently.

Another drawback is that MIDI can introduce latency, especially over legacy 5-pin DIN connections, which run at only 31.25 kbit/s, and through long daisy chains of devices where each unit passes the data along.
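
The size of this delay on a 5-pin DIN link can be estimated with simple arithmetic. The MIDI 1.0 serial link runs at 31,250 bits per second and frames each byte with a start and a stop bit, so every byte occupies 10 bits on the wire. The sketch below covers only this transmission time; it ignores processing delays inside each device, and the function name is hypothetical.

```python
MIDI_BAUD = 31_250      # bits per second on a 5-pin DIN MIDI link
BITS_PER_BYTE = 10      # 1 start bit + 8 data bits + 1 stop bit

def wire_time_ms(num_bytes: int) -> float:
    """Time in milliseconds needed to transmit num_bytes over DIN MIDI."""
    return num_bytes * BITS_PER_BYTE / MIDI_BAUD * 1000.0

# A single 3-byte Note On takes just under a millisecond...
single_note_ms = wire_time_ms(3)
# ...but a 10-note chord sent as ten full messages takes ten times as long.
ten_note_chord_ms = wire_time_ms(3 * 10)
```

A single message is therefore rarely the problem; the delay becomes noticeable when dense chords, clock and streams of controller data share the same cable.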

Analog control voltage connectivity on some synth models provides more responsive performance than MIDI but works over shorter cable distances.

Finally, some low bandwidth legacy MIDI implementations lack advanced features like parameter feedback for bi-directional communication between devices which reduces the degree of real-time expressiveness.

Conclusion

In conclusion, despite some limitations compared to dedicated MIDI controllers, synthesizers make amazingly versatile central hubs for controlling and manipulating MIDI driven gear, both in the studio and on stage.

With the right connectivity setup and MIDI mapping, synth players can shape external sounds in expressive ways while benefiting from the real-time sound reinforcement from the same instrument.